[TOC]

YOLO

-

Darknet: Open Source Neural Networks in C

-

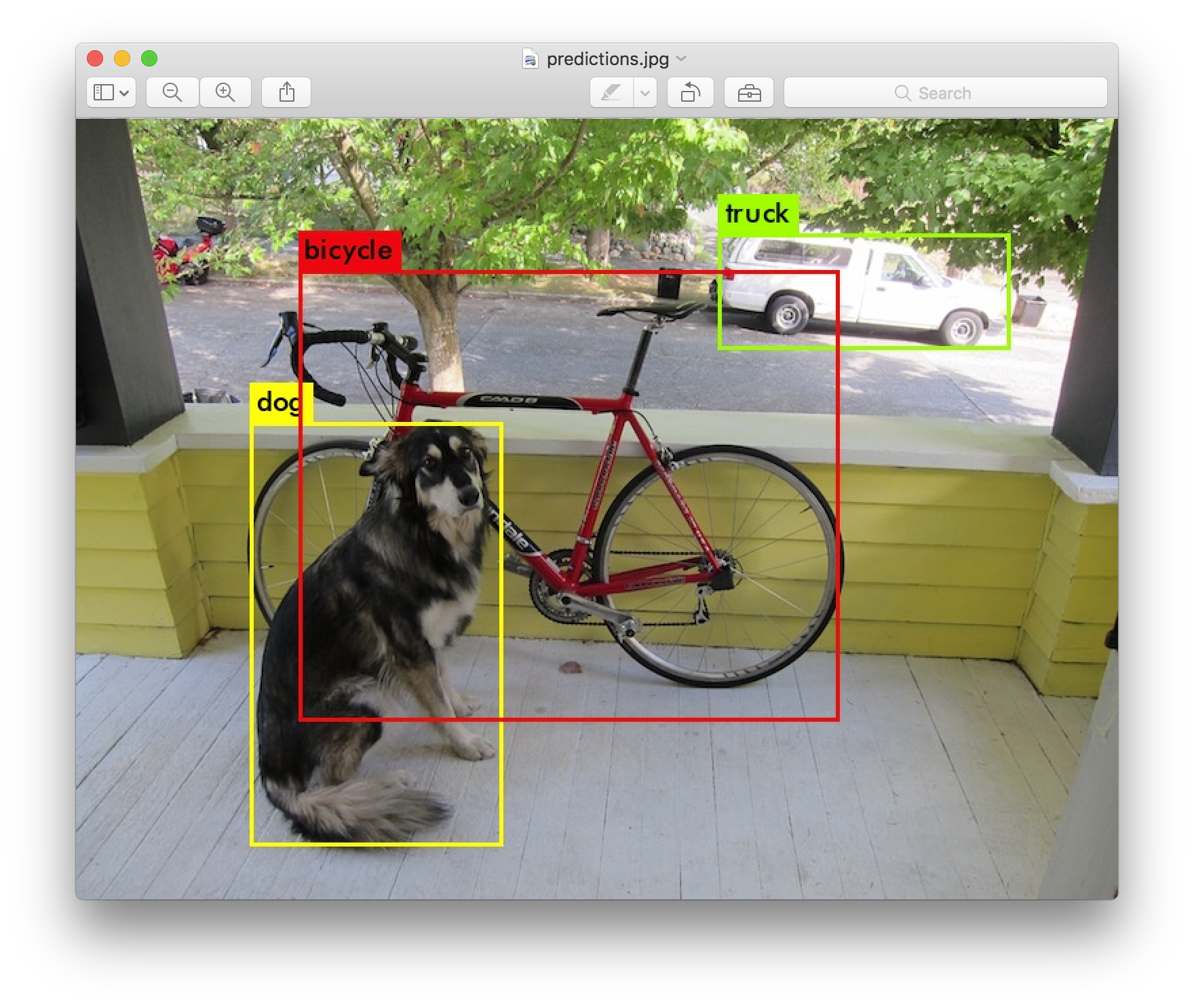

YOLO: Real-Time Object Detection

Build / Install

Detection Using A Pre-Trained Model

./darknet detect cfg/yolov3.cfg yolov3.weights data/dog.jpg

- output

layer filters size input output
0 conv 32 3 x 3 / 1 608 x 608 x 3 -> 608 x 608 x 32 0.639 BFLOPs
1 conv 64 3 x 3 / 2 608 x 608 x 32 -> 304 x 304 x 64 3.407 BFLOPs
2 conv 32 1 x 1 / 1 304 x 304 x 64 -> 304 x 304 x 32 0.379 BFLOPs
3 conv 64 3 x 3 / 1 304 x 304 x 32 -> 304 x 304 x 64 3.407 BFLOPs
4 res 1 304 x 304 x 64 -> 304 x 304 x 64
5 conv 128 3 x 3 / 2 304 x 304 x 64 -> 152 x 152 x 128 3.407 BFLOPs
6 conv 64 1 x 1 / 1 152 x 152 x 128 -> 152 x 152 x 64 0.379 BFLOPs
7 conv 128 3 x 3 / 1 152 x 152 x 64 -> 152 x 152 x 128 3.407 BFLOPs
8 res 5 152 x 152 x 128 -> 152 x 152 x 128
9 conv 64 1 x 1 / 1 152 x 152 x 128 -> 152 x 152 x 64 0.379 BFLOPs
10 conv 128 3 x 3 / 1 152 x 152 x 64 -> 152 x 152 x 128 3.407 BFLOPs
11 res 8 152 x 152 x 128 -> 152 x 152 x 128
12 conv 256 3 x 3 / 2 152 x 152 x 128 -> 76 x 76 x 256 3.407 BFLOPs
13 conv 128 1 x 1 / 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BFLOPs
14 conv 256 3 x 3 / 1 76 x 76 x 128 -> 76 x 76 x 256 3.407 BFLOPs
15 res 12 76 x 76 x 256 -> 76 x 76 x 256
16 conv 128 1 x 1 / 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BFLOPs
17 conv 256 3 x 3 / 1 76 x 76 x 128 -> 76 x 76 x 256 3.407 BFLOPs
18 res 15 76 x 76 x 256 -> 76 x 76 x 256
19 conv 128 1 x 1 / 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BFLOPs
20 conv 256 3 x 3 / 1 76 x 76 x 128 -> 76 x 76 x 256 3.407 BFLOPs
21 res 18 76 x 76 x 256 -> 76 x 76 x 256
22 conv 128 1 x 1 / 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BFLOPs
23 conv 256 3 x 3 / 1 76 x 76 x 128 -> 76 x 76 x 256 3.407 BFLOPs
24 res 21 76 x 76 x 256 -> 76 x 76 x 256
25 conv 128 1 x 1 / 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BFLOPs
26 conv 256 3 x 3 / 1 76 x 76 x 128 -> 76 x 76 x 256 3.407 BFLOPs
27 res 24 76 x 76 x 256 -> 76 x 76 x 256
28 conv 128 1 x 1 / 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BFLOPs
29 conv 256 3 x 3 / 1 76 x 76 x 128 -> 76 x 76 x 256 3.407 BFLOPs
30 res 27 76 x 76 x 256 -> 76 x 76 x 256
31 conv 128 1 x 1 / 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BFLOPs
32 conv 256 3 x 3 / 1 76 x 76 x 128 -> 76 x 76 x 256 3.407 BFLOPs
33 res 30 76 x 76 x 256 -> 76 x 76 x 256
34 conv 128 1 x 1 / 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BFLOPs
35 conv 256 3 x 3 / 1 76 x 76 x 128 -> 76 x 76 x 256 3.407 BFLOPs
36 res 33 76 x 76 x 256 -> 76 x 76 x 256
37 conv 512 3 x 3 / 2 76 x 76 x 256 -> 38 x 38 x 512 3.407 BFLOPs
38 conv 256 1 x 1 / 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BFLOPs
39 conv 512 3 x 3 / 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BFLOPs
40 res 37 38 x 38 x 512 -> 38 x 38 x 512
41 conv 256 1 x 1 / 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BFLOPs
42 conv 512 3 x 3 / 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BFLOPs
43 res 40 38 x 38 x 512 -> 38 x 38 x 512
44 conv 256 1 x 1 / 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BFLOPs
45 conv 512 3 x 3 / 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BFLOPs
46 res 43 38 x 38 x 512 -> 38 x 38 x 512
47 conv 256 1 x 1 / 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BFLOPs
48 conv 512 3 x 3 / 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BFLOPs
49 res 46 38 x 38 x 512 -> 38 x 38 x 512
50 conv 256 1 x 1 / 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BFLOPs
51 conv 512 3 x 3 / 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BFLOPs
52 res 49 38 x 38 x 512 -> 38 x 38 x 512
53 conv 256 1 x 1 / 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BFLOPs
54 conv 512 3 x 3 / 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BFLOPs
55 res 52 38 x 38 x 512 -> 38 x 38 x 512
56 conv 256 1 x 1 / 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BFLOPs
57 conv 512 3 x 3 / 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BFLOPs
58 res 55 38 x 38 x 512 -> 38 x 38 x 512
59 conv 256 1 x 1 / 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BFLOPs
60 conv 512 3 x 3 / 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BFLOPs
61 res 58 38 x 38 x 512 -> 38 x 38 x 512
62 conv 1024 3 x 3 / 2 38 x 38 x 512 -> 19 x 19 x 1024 3.407 BFLOPs
63 conv 512 1 x 1 / 1 19 x 19 x 1024 -> 19 x 19 x 512 0.379 BFLOPs
64 conv 1024 3 x 3 / 1 19 x 19 x 512 -> 19 x 19 x 1024 3.407 BFLOPs
65 res 62 19 x 19 x 1024 -> 19 x 19 x 1024
66 conv 512 1 x 1 / 1 19 x 19 x 1024 -> 19 x 19 x 512 0.379 BFLOPs
67 conv 1024 3 x 3 / 1 19 x 19 x 512 -> 19 x 19 x 1024 3.407 BFLOPs
68 res 65 19 x 19 x 1024 -> 19 x 19 x 1024
69 conv 512 1 x 1 / 1 19 x 19 x 1024 -> 19 x 19 x 512 0.379 BFLOPs
70 conv 1024 3 x 3 / 1 19 x 19 x 512 -> 19 x 19 x 1024 3.407 BFLOPs
71 res 68 19 x 19 x 1024 -> 19 x 19 x 1024
72 conv 512 1 x 1 / 1 19 x 19 x 1024 -> 19 x 19 x 512 0.379 BFLOPs
73 conv 1024 3 x 3 / 1 19 x 19 x 512 -> 19 x 19 x 1024 3.407 BFLOPs
74 res 71 19 x 19 x 1024 -> 19 x 19 x 1024
75 conv 512 1 x 1 / 1 19 x 19 x 1024 -> 19 x 19 x 512 0.379 BFLOPs
76 conv 1024 3 x 3 / 1 19 x 19 x 512 -> 19 x 19 x 1024 3.407 BFLOPs
77 conv 512 1 x 1 / 1 19 x 19 x 1024 -> 19 x 19 x 512 0.379 BFLOPs
78 conv 1024 3 x 3 / 1 19 x 19 x 512 -> 19 x 19 x 1024 3.407 BFLOPs
79 conv 512 1 x 1 / 1 19 x 19 x 1024 -> 19 x 19 x 512 0.379 BFLOPs
80 conv 1024 3 x 3 / 1 19 x 19 x 512 -> 19 x 19 x 1024 3.407 BFLOPs
81 conv 255 1 x 1 / 1 19 x 19 x 1024 -> 19 x 19 x 255 0.189 BFLOPs
82 yolo
83 route 79
84 conv 256 1 x 1 / 1 19 x 19 x 512 -> 19 x 19 x 256 0.095 BFLOPs
85 upsample 2x 19 x 19 x 256 -> 38 x 38 x 256
86 route 85 61
87 conv 256 1 x 1 / 1 38 x 38 x 768 -> 38 x 38 x 256 0.568 BFLOPs
88 conv 512 3 x 3 / 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BFLOPs
89 conv 256 1 x 1 / 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BFLOPs
90 conv 512 3 x 3 / 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BFLOPs
91 conv 256 1 x 1 / 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BFLOPs
92 conv 512 3 x 3 / 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BFLOPs
93 conv 255 1 x 1 / 1 38 x 38 x 512 -> 38 x 38 x 255 0.377 BFLOPs
94 yolo
95 route 91
96 conv 128 1 x 1 / 1 38 x 38 x 256 -> 38 x 38 x 128 0.095 BFLOPs
97 upsample 2x 38 x 38 x 128 -> 76 x 76 x 128
98 route 97 36
99 conv 128 1 x 1 / 1 76 x 76 x 384 -> 76 x 76 x 128 0.568 BFLOPs
100 conv 256 3 x 3 / 1 76 x 76 x 128 -> 76 x 76 x 256 3.407 BFLOPs
101 conv 128 1 x 1 / 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BFLOPs
102 conv 256 3 x 3 / 1 76 x 76 x 128 -> 76 x 76 x 256 3.407 BFLOPs
103 conv 128 1 x 1 / 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BFLOPs
104 conv 256 3 x 3 / 1 76 x 76 x 128 -> 76 x 76 x 256 3.407 BFLOPs
105 conv 255 1 x 1 / 1 76 x 76 x 256 -> 76 x 76 x 255 0.754 BFLOPs
106 yolo
Loading weights from yolov3.weights...Done!
data/dog.jpg: Predicted in 18.148226 seconds.
dog: 100%
truck: 92%
bicycle: 99%
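The BFLOPs column in the log above can be reproduced from the layer shapes. The following is a minimal sketch, assuming two floating-point operations (one multiply, one add) per kernel weight per output pixel and ignoring bias and batch-norm cost. The function name `conv_bflops` is hypothetical and not part of Darknet.

```python
def conv_bflops(filters, size, in_channels, out_h, out_w):
    """Estimate billions of floating-point operations for one conv layer.

    Assumption: 2 operations (multiply + add) per kernel weight
    for every output pixel of every filter.
    """
    macs = size * size * in_channels * filters * out_h * out_w
    return 2 * macs / 1e9


# Layer 0: 32 filters, 3x3, input 608x608x3 -> output 608x608x32
print(round(conv_bflops(32, 3, 3, 608, 608), 3))    # 0.639
# Layer 1: 64 filters, 3x3 stride 2, input 608x608x32 -> output 304x304x64
print(round(conv_bflops(64, 3, 32, 304, 304), 3))   # 3.407
# Layer 81: 255 filters, 1x1, input 19x19x1024 -> output 19x19x255
print(round(conv_bflops(255, 1, 1024, 19, 19), 3))  # 0.189
```

The stride only enters through the output size, which is why the stride-2 layer 1 is evaluated on its 304 x 304 output rather than its 608 x 608 input.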

- Fixed the "CUDA Error: out of memory" failure by lowering batch in the cfg:

# cfg/yolov3.cfg
batch=16
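Lowering batch is one way to reduce memory use; the cfg comments further down describe a second knob, subdivisions, which splits each batch into smaller chunks. A minimal sketch of that arithmetic follows. The helper name `images_per_pass` is hypothetical, and the divisibility check is an assumption of this sketch rather than something Darknet is known to enforce.

```python
def images_per_pass(batch, subdivisions):
    """Number of images processed together in one forward/backward pass.

    Per the cfg comments, weights are updated once per `batch` images,
    while only batch/subdivisions images are handled at a time.
    """
    if batch % subdivisions != 0:
        # Assumption of this sketch: keep the split exact.
        raise ValueError("batch should be divisible by subdivisions")
    return batch // subdivisions


print(images_per_pass(64, 8))   # 8
print(images_per_pass(64, 16))  # 4
```

Under this reading, raising subdivisions shrinks the chunk held in GPU memory without changing how many images contribute to each weight update.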

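An annotated configuration file follows. Although labeled yolov3.cfg, its [maxpool], [reorg], and [region] sections are YOLOv2-style; the stock yolov3.cfg uses [shortcut], [upsample], and [yolo] layers instead.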

[net]

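batch=64 # parameters are updated once every batch samples

subdivisions=8 # if memory is insufficient, the batch is split into subdivisions sub-batches, each of size batch/subdivisions

# in the darknet code, batch/subdivisions is itself named batch

height=416 # height of the input image

width=416 # width of the input image

channels=3 # number of channels of the input image

momentum=0.9 # momentum

decay=0.0005 # weight decay regularization term, to prevent overfitting

angle=0 # generate more training samples by rotating the image

saturation = 1.5 # generate more training samples by adjusting saturation

exposure = 1.5 # generate more training samples by adjusting exposure

hue=.1 # generate more training samples by adjusting hue

learning_rate=0.0001 # initial learning rate

max_batches = 45000 # training stops once max_batches is reached

policy=steps # learning-rate policy; the available policies are CONSTANT, STEP, EXP, POLY, STEPS, SIG, RANDOM

steps=100,25000,35000 # batch numbers at which the learning rate is adjusted

scales=10,.1,.1 # factors applied to the learning rate at each step; they multiply cumulatively

[convolutional]

batch_normalize=1 # whether to apply batch normalization (BN)

filters=32 # number of output feature maps

size=3 # kernel size

stride=1 # convolution stride

pad=1 # if pad is 0, padding is set by the padding parameter; if pad is 1, padding is size/2

activation=leaky # activation function, one of:

# logistic,loggy,relu,elu,relie,plse,hardtan,lhtan,linear,ramp,leaky,tanh,stair

[maxpool]

size=2 # pooling window size

stride=2 # pooling stride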

[convolutional]

batch_normalize=1

filters=64

size=3

stride=1

pad=1

activation=leaky

[maxpool]

size=2

stride=2

......

......

#######

[convolutional]

batch_normalize=1

size=3

stride=1

pad=1

filters=1024

activation=leaky

[convolutional]

batch_normalize=1

size=3

stride=1

pad=1

filters=1024

activation=leaky

[route] # the route layer is to bring finer grained features in from earlier in the network

layers=-9

[reorg] # the reorg layer is to make these features match the feature map size at the later layer.

# The end feature map is 13x13, the feature map from earlier is 26x26x512.

# The reorg layer maps the 26x26x512 feature map onto a 13x13x2048 feature map

# so that it can be concatenated with the feature maps at 13x13 resolution.

stride=2

[route]

layers=-1,-3

[convolutional]

batch_normalize=1

size=3

stride=1

pad=1

filters=1024

activation=leaky

[convolutional]

size=1

stride=1

pad=1

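filters=125 # the filter count of the last convolutional layer before region is fixed by the formula filters=num*(classes+5)

# the 5 stands for the five predicted values tx, ty, tw, th, to from the paper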

activation=linear

[region]

anchors = 1.08,1.19, 3.42,4.41, 6.63,11.38, 9.42,5.11, 16.62,10.52 # prior (anchor) boxes; they can be picked by hand

# or learned from the training samples with k-means

bias_match=1

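classes=20 # number of object classes the network must recognize

coords=4 # the 4 coordinates of each box: tx, ty, tw, th

num=5 # number of boxes predicted per grid cell; must match the number of anchors. To use more anchors, increase num; if Obj approaches 0 during training after increasing num, try increasing object_scale

softmax=1 # use softmax as the activation function

jitter=.2 # add noise through jitter to suppress overfitting

rescore=1 # tentatively understood as a switch: when nonzero, rescoring is used to adjust l.delta (the difference between prediction and ground truth)

object_scale=5 # weight of the bbox confidence loss in the total loss when the grid cell contains an object

noobject_scale=1 # weight of the bbox confidence loss in the total loss when the grid cell contains no object

class_scale=1 # weight of the class loss in the total loss

coord_scale=1 # weight of the bbox coordinate loss in the total loss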

absolute=1

thresh = .6

random=0 # when random is 1, Multi-Scale Training is enabled: images of randomly chosen sizes are used for training
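Two pieces of arithmetic in the cfg above are easy to check by hand: the filter count of the last convolutional layer before [region], and the shape change performed by [reorg]. A minimal sketch follows; the helper names `region_filters` and `reorg_shape` are hypothetical and only mirror the formulas stated in the cfg comments.

```python
def region_filters(num, classes, coords=4):
    """Filters for the conv layer feeding [region]: num * (classes + coords + 1).

    With coords=4 this is the num*(classes+5) formula from the cfg comment;
    the extra 1 is the objectness value.
    """
    return num * (classes + coords + 1)


def reorg_shape(height, width, channels, stride):
    """Shape after folding each stride x stride spatial block into channels."""
    return height // stride, width // stride, channels * stride * stride


print(region_filters(num=5, classes=20))   # 125
print(reorg_shape(26, 26, 512, stride=2))  # (13, 13, 2048)
```

When changing classes or num for a custom dataset, the filters value has to be recomputed with the same formula, and the anchors list should still contain num pairs.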

Training YOLO on VOC
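During training, the learning rate follows the policy, steps, and scales entries of the [net] section. The sketch below illustrates the cumulative multiplication described in the cfg comments. The function name `learning_rate_at` is hypothetical, and any warm-up (burn-in) behaviour is deliberately left out, so treat it as an illustration rather than Darknet's exact code.

```python
def learning_rate_at(batch_num, base_lr, steps, scales):
    """Learning rate under policy=steps.

    Each scale is multiplied in once batch_num has reached the
    matching step, so the factors accumulate over training.
    """
    rate = base_lr
    for step, scale in zip(steps, scales):
        if batch_num < step:
            break
        rate *= scale
    return rate


steps = [100, 25000, 35000]
scales = [10, 0.1, 0.1]
for batch_num in (0, 100, 25000, 35000):
    print(batch_num, learning_rate_at(batch_num, 0.0001, steps, scales))
```

With the values from the cfg, the rate starts at 0.0001, rises to roughly 0.001 after batch 100, and then drops by a factor of ten at batches 25000 and 35000.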

Yolo for ROS

- https://github.com/leggedrobotics/darknet_ros

- YOLO ROS: Real-Time Object Detection for ROS

cfg files

- darknet_ros.launch

<!-- darknet_ros.launch -->
<!-- ROS and network parameter files -->
<arg name="ros_param_file" default="$(find darknet_ros)/config/ros.yaml"/>
<arg name="network_param_file" default="$(find darknet_ros)/config/yolov3.yaml"/>

- ros.yaml

# ros.yaml
subscribers:
  camera_reading:
    topic: /camera/zed/rgb/image_rect_color
    queue_size: 1

Build

cd ws_yolo/src

git clone --recursive git@github.com:leggedrobotics/darknet_ros.git

cd ../

catkin_make -DCMAKE_BUILD_TYPE=Release

# or

catkin build darknet_ros -DCMAKE_BUILD_TYPE=Release